The appetite for hardware to train AI models is voracious.

AI chips are forecast to account for up to 20% of the $450 billion total semiconductor market by 2025, according to McKinsey. And The Insight Partners projects that sales of AI chips will climb from $5.7 billion in 2018 to $83.3 billion in 2027, a compound annual growth rate of 35%. (That’s close to 10 times the forecast growth rate for non-AI chips.)
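As a quick sanity check on The Insight Partners’ figures, here is a minimal Python sketch of the standard compound annual growth rate formula, (end / start)^(1 / years) - 1. It assumes the 2018 to 2027 span counts as nine compounding years; the `cagr` helper is hypothetical and written only for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into
    end_value over the given number of years (hypothetical helper)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Forecast cited above: $5.7B in 2018 growing to $83.3B in 2027.
# Assumption: nine compounding periods (2027 - 2018).
rate = cagr(5.7, 83.3, 2027 - 2018)
print(f"{rate:.1%}")
```

The result comes out to roughly 34.7% a year, which rounds to the 35% figure in the projection.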

Case in point: Tenstorrent, the AI hardware startup helmed by engineering luminary Jim Keller, this week announced that it raised $100 million in a convertible note funding round co-led by Hyundai Motor Group and Samsung Catalyst Fund.

Half of that total, $50 million, came from Hyundai’s two carmaking units, Hyundai Motor ($30 million) and Kia ($20 million), which plan to partner with Tenstorrent to jointly develop chips, specifically CPUs and AI co-processors, for future mobility vehicles and robots. Samsung Catalyst and other VC funds, including Fidelity Ventures, Eclipse Ventures, Epiq Capital and Maverick Capital, contributed the remaining $50 million.

Unlike equity, a convertible note is short-term debt that converts to equity upon some predetermined event. Why Tenstorrent opted for debt over equity isn’t entirely clear — nor is the company’s post-money valuation. (Tenstorrent described it as an “up-round” in a release.) Tenstorrent last raised $200 million at a valuation eclipsing $2 billion.

The convertible note tranche brings Tenstorrent’s total raised to $334.5 million. Keller says the money will go toward product development, the design and development of AI chiplets and Tenstorrent’s machine learning software roadmap.

Toronto-based Tenstorrent sells AI processors and licenses AI software solutions and IP around RISC-V, the open source instruction set architecture used to develop custom processors for a range of applications.

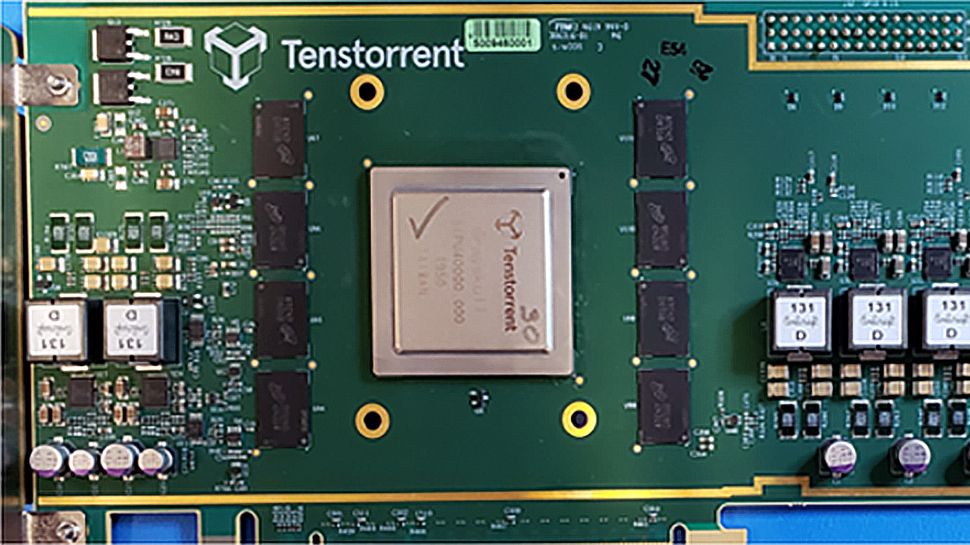

A top-down view of Tenstorrent’s custom-designed hardware for AI processing.

Founded in 2016 by Ivan Hamer (a former embedded engineer at AMD), Ljubisa Bajic (the ex-director of integrated circuit design at AMD) and Milos Trajkovic (previously an AMD firmware design engineer), Tenstorrent early on poured the bulk of its resources into developing its own in-house infrastructure. In 2020, Tenstorrent announced Grayskull, an all-in-one system designed to accelerate AI model training in data centers, public and private clouds, on-premises servers and edge servers, featuring Tenstorrent’s proprietary Tensix cores.

But in the intervening years, perhaps feeling pressure from incumbents like Nvidia, Tenstorrent shifted its focus to licensing and services, and Bajic, once at the helm, gradually moved into an advisory role.

In 2021, Tenstorrent launched DevCloud, a cloud-based service that lets developers run AI models without first having to purchase hardware. And, more recently, the company established partnerships with India-based server system builder Bodhi Computing and with LG to build Tenstorrent’s products into the former’s servers and the latter’s automotive products and TVs. (As part of the LG deal, Tenstorrent said it would work with LG to deliver improved video processing in Tenstorrent’s upcoming data center products.)

Nothing if not ambitious, Tenstorrent opened a Tokyo office in March, expanding beyond its existing offices in Toronto, Austin and Silicon Valley.

The question is whether it can compete against the other heavyweights in the AI chip race.

Google designed its own processor, the TPU (short for “tensor processing unit”), and uses it to train large generative AI systems like PaLM 2 and Imagen. Amazon offers proprietary chips to AWS customers for both training (Trainium) and inferencing (Inferentia). And Microsoft is reportedly working with AMD to develop an in-house AI chip called Athena.

Nvidia, meanwhile, briefly became a $1 trillion company this year, riding high on the demand for its GPUs for AI training. (As of Q2 2022, Nvidia held an 80% share of the discrete GPU market.) GPUs, while not necessarily as capable as custom-designed AI chips, can perform many computations in parallel, making them well suited to training today’s most sophisticated models.

Unsurprisingly, it’s been a tough environment for startups and even for tech giants. Last year, AI chipmaker Graphcore, which reportedly had its valuation slashed by $1 billion after a deal with Microsoft fell through, said that it was planning job cuts due to the “extremely challenging” macroeconomic environment. Meanwhile, Habana Labs, the Intel-owned AI chip company, laid off an estimated 10% of its workforce.

Complicating matters is a shortage of the components necessary to build AI chips. Time will tell, as it always does, which vendors come out on top.